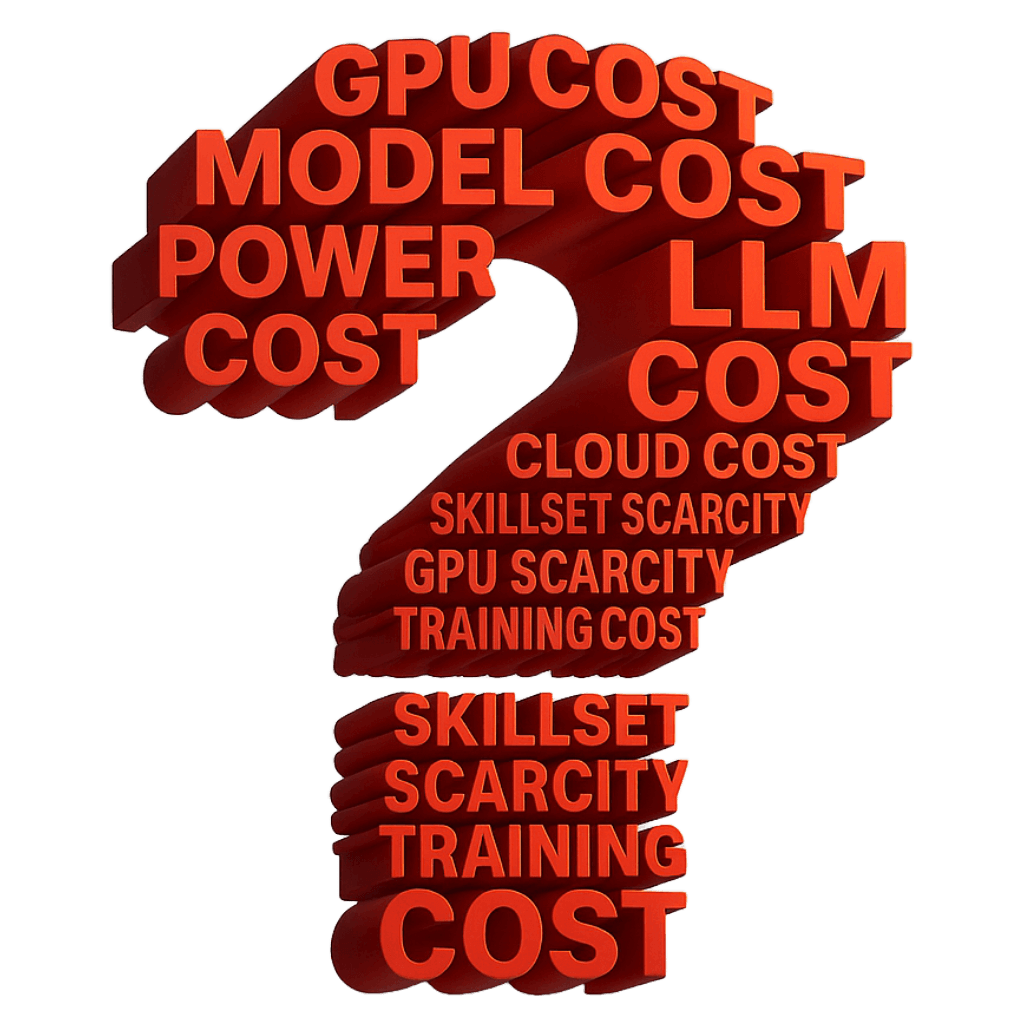

We help you optimize your GenAI stack to significantly reduce costs without compromising performance. By focusing on inference optimization, efficient resource utilization, right-sizing, and tuning for high goodput, we ensure every dollar spent delivers maximum value. Our clients save up to 60% on their GenAI infrastructure through intelligent, data-driven cost strategies.

Book A Consultation

Optimizing GenAI workloads requires more than just turning off idle resources — it demands a deep understanding of model behavior, infrastructure efficiency, and throughput economics. Our approach blends system-level optimization with model-aware tuning to deliver sustained cost reductions across inference, training, and deployment pipelines.

Inference Optimization: We reduce per-request compute cost by selecting the most efficient model size for the task, enabling mixed-precision inference, and leveraging optimized runtimes like Bud Runtime. We also integrate caching layers and batch processing to optimize token costs across calls.

Resource Efficiency & Autoscaling: Through fine-grained telemetry, we identify underutilized GPUs, CPU bottlenecks, and memory overhead across your stack. Our system recommends and enforces intelligent autoscaling policies, spot instance usage, and the right mix of horizontal and vertical scaling based on real-time demand patterns.

Right-Sizing & Goodput Maximization: We analyze your workload characteristics to match model deployment sizes (parameter count, quantization level) with use-case precision needs. By optimizing for goodput (useful tokens/sec per dollar) rather than raw throughput, we ensure you're not overpaying for excess compute that doesn't translate into actual business value.
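As a rough illustration of the goodput idea, the sketch below compares two deployments by useful tokens per dollar rather than raw tokens per second. The deployment names, throughput figures, prices, and the `useful_fraction` field are all hypothetical placeholders, not measurements from any client system.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    tokens_per_sec: float    # raw generated tokens per second
    useful_fraction: float   # assumed share of tokens that met quality/latency targets
    cost_per_hour: float     # USD per hour for the serving hardware


def goodput_per_dollar(d: Deployment) -> float:
    """Useful tokens produced per dollar spent."""
    useful_tokens_per_hour = d.tokens_per_sec * d.useful_fraction * 3600
    return useful_tokens_per_hour / d.cost_per_hour


# Hypothetical numbers, chosen only to show the comparison.
large = Deployment("large-fp16", tokens_per_sec=2000, useful_fraction=0.95, cost_per_hour=8.0)
small = Deployment("small-int8", tokens_per_sec=1500, useful_fraction=0.90, cost_per_hour=2.0)

best = max([large, small], key=goodput_per_dollar)
for d in (large, small):
    print(f"{d.name}: {goodput_per_dollar(d):,.0f} useful tokens per dollar")
print("best value:", best.name)
```

In this made-up case the larger deployment has higher raw throughput, but the smaller one delivers more useful tokens for each dollar, which is the quantity right-sizing aims to maximize.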

Opt for domain- and task-specific fine-tuned Small Language Models instead of large general-purpose models. Use quantized models to cut compute costs and shrink model size while keeping accuracy close to the original. These approaches balance performance with resource savings, especially during inference.
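To make the size reduction concrete, here is a back-of-the-envelope sketch of weight memory at different precisions. It counts model weights only and assumes the nominal bytes per parameter for each format; real deployments also need memory for the KV cache, activations, and quantization metadata, so treat the output as a lower bound.

```python
# Nominal storage per parameter for common precisions (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory needed to hold the weights, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# A hypothetical 7-billion-parameter model.
params = 7e9
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(params, precision):.1f} GB")
```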

Contact Us

Hybrid inferencing combines Small Language Models (SLMs) on local hardware with Large Language Models (LLMs) in the cloud. By evaluating each generated token’s quality, it selectively uses the LLM only when necessary. This approach balances cost and performance, ensuring efficient, high-quality AI outputs while reducing cloud dependency.
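The token-level routing described above might look like the following minimal sketch. It assumes the small model reports a confidence score with each proposed token and uses that score as the quality signal; the function names, the threshold value, and the toy models are hypothetical stand-ins, not a production implementation.

```python
from typing import Callable, List, Tuple

# The local small model proposes (token, confidence); the cloud model returns a token.
SlmStep = Callable[[List[str]], Tuple[str, float]]
LlmStep = Callable[[List[str]], str]


def hybrid_generate(
    prompt_tokens: List[str],
    slm_step: SlmStep,
    llm_step: LlmStep,
    threshold: float = 0.7,
    max_new_tokens: int = 32,
    eos: str = "<eos>",
) -> Tuple[List[str], int]:
    """Generate with the small model, falling back to the large model per token."""
    tokens = list(prompt_tokens)
    llm_calls = 0
    for _ in range(max_new_tokens):
        token, confidence = slm_step(tokens)
        if confidence < threshold:
            # Low-confidence token: pay for one cloud call to replace it.
            token = llm_step(tokens)
            llm_calls += 1
        if token == eos:
            break
        tokens.append(token)
    return tokens[len(prompt_tokens):], llm_calls


# Toy stand-ins keyed on context length, for demonstration only.
def toy_slm(context: List[str]) -> Tuple[str, float]:
    script = {1: ("Paris", 0.95), 2: ("is", 0.90), 3: ("lovely", 0.40), 4: ("<eos>", 0.99)}
    return script[len(context)]


def toy_llm(context: List[str]) -> str:
    return "beautiful"


output, cloud_calls = hybrid_generate(["Q"], toy_slm, toy_llm)
print(output, "cloud calls:", cloud_calls)
```

Only the one low-confidence step reaches the cloud model here; the threshold is the knob that trades cost against quality.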


Heterogeneous hardware inferencing uses a mix of CPUs, commodity GPUs, and high-end GPUs to serve large language models efficiently. By splitting tasks across available hardware, it reduces costs, improves resource utilization, and maintains performance—offering a smarter, more scalable alternative to GPU-only deployments.


Keep prompts concise to minimize token usage, since every extra token in a prompt adds to the cost of each call. Use system-level instructions strategically: reuse system prompts when possible and avoid redundancy in API calls to enhance performance and reduce resource consumption.


Cache common queries and responses to reduce repeated GenAI API calls and improve efficiency. Use deterministic prompting—structure prompts to yield consistent outputs—so cached results remain reliable and reusable. This approach lowers costs and enhances performance.
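A minimal sketch of such a cache is shown below, assuming an in-memory dictionary and a key derived from the model name plus a normalized prompt. The `ResponseCache` class, the normalization rule (lowercasing and collapsing whitespace), and the `fake_generate` stand-in for a real GenAI call are all illustrative assumptions; normalization in particular should be relaxed if casing matters for your prompts.

```python
import hashlib
import json
from typing import Callable, Dict


class ResponseCache:
    """In-memory cache keyed by a hash of the model name and normalized prompt."""

    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate          # stand-in for the real GenAI API call
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, model: str) -> str:
        # Assumed normalization: lowercase and collapse runs of whitespace.
        normalized = " ".join(prompt.lower().split())
        payload = json.dumps({"model": model, "prompt": normalized}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, prompt: str, model: str = "example-model") -> str:
        key = self._key(prompt, model)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._generate(prompt)
        self._store[key] = response
        return response


def fake_generate(prompt: str) -> str:
    return f"answer to: {prompt}"


cache = ResponseCache(fake_generate)
first = cache.get("What is  goodput?")
second = cache.get("what is goodput?")   # same key after normalization
print("hits:", cache.hits, "misses:", cache.misses)
```

Caching like this is only safe when the underlying call is configured to give consistent outputs for the same input, which is why deterministic prompting matters.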


Monitor usage and costs by user and task to identify high-cost areas. Set thresholds and alerts to stay within budget. Regularly audit and refine model use based on performance, usage patterns, and business needs to ensure efficient and aligned GenAI operations.
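One way to picture per-user, per-task budgets with alerts is the sketch below. The `CostTracker` class, the 80% alert ratio, the per-token price, and the example user and task names are hypothetical; a real setup would read usage from billing or telemetry data.

```python
from collections import defaultdict
from typing import Dict, Tuple

Key = Tuple[str, str]  # (user, task)


class CostTracker:
    """Accumulates spend per (user, task) and reports status against a budget."""

    def __init__(self, budgets: Dict[Key, float], alert_ratio: float = 0.8):
        self.budgets = budgets            # USD budget per (user, task)
        self.alert_ratio = alert_ratio    # fraction of budget that triggers an alert
        self.spend: Dict[Key, float] = defaultdict(float)

    def record(self, user: str, task: str, tokens: int, usd_per_1k_tokens: float) -> str:
        key = (user, task)
        self.spend[key] += tokens / 1000 * usd_per_1k_tokens
        return self.status(key)

    def status(self, key: Key) -> str:
        budget = self.budgets.get(key)
        if budget is None:
            return "untracked"
        spent = self.spend[key]
        if spent >= budget:
            return "over_budget"
        if spent >= self.alert_ratio * budget:
            return "alert"
        return "ok"


tracker = CostTracker({("alice", "summarize"): 10.0})
statuses = [
    tracker.record("alice", "summarize", 400_000, 0.01),  # about $4.00 spent
    tracker.record("alice", "summarize", 450_000, 0.01),  # about $8.50 spent
    tracker.record("alice", "summarize", 200_000, 0.01),  # about $10.50 spent
]
print(statuses)
```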


Take a sneak peek at our successful AI projects completed for global clients. Contact our team for a full view of our AI project portfolio.

Accubits developed Infinite Coach and Hybrid360, a suite of AI-powered leadership development tools that automate 360-degree feedback, personalize coaching insights, and enhance collaboration across organizations.

CaseScribeAI is an AI-powered platform developed for legal professionals handling VA disability, Social Security, and Personal Injury cases, aimed at reducing the time and effort required to process complex legal documents.

For DIFC courts, we built a system that could support natural language interaction, contextual document analysis, legal summarization, and data-driven insights across a variety of legal records.

Our tech expertise has earned the trust of top global brands and esteemed federal agencies.

"They showed flexibility, and responsiveness throughout the project and were able to manage it effectively without facing any language barriers."

"Accubits brought a wealth of experience to the project and significantly enhanced our initial concepts."

"Accubits was proactive in responding to unexpected market demands and delivered high-quality results, while also providing helpful recommendations."

Drop us a line to book a no-obligation consultation and discover growth opportunities for your business.

We’re recognized by global brands for our service excellence.


Any questions or remarks? Just write us a message!

The first step towards greatness begins now. Let's embark on this journey.

Share more information with us, and we'll send relevant information that caters to your unique needs.

Kindly share some details about your company to help us identify the best-suited person to contact you.